
Current authentication process

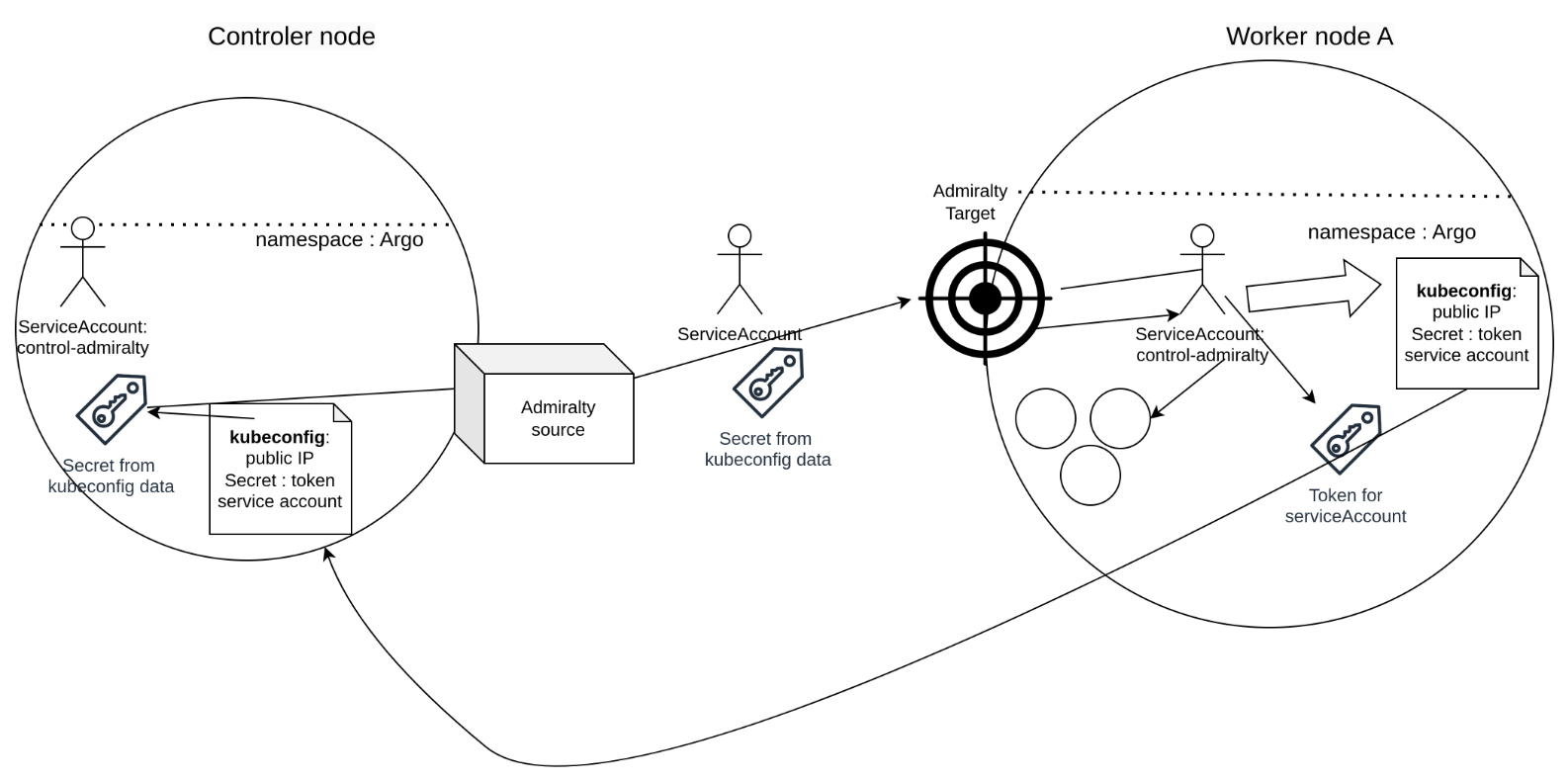

We are currently able to authenticate against a remote Admiralty Target in order to execute pods from the Source cluster in a remote cluster, in the context of an Argo Workflow. The resulting artifacts or data can then be retrieved in the source cluster.

In this document we present the steps needed for this authentication process, its flaws, and the improvements we could make.

Requirements

Namespace

The same namespace must exist in each cluster. Both namespaces also need to have the same resources available, meaning here that Argo must be deployed in the same way.

We haven't tested it yet, but the version of Argo Workflows should probably be the same in both clusters in order to prevent mismatches between functionalities.

ServiceAccount

A serviceAccount with the same name must be created on each side of the cluster federation.

In the case of Argo Workflows, it is either passed to the Argo CLI when submitting the workflow or specified in the spec.serviceAccountName field of the Workflow.
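As an illustration of the second option, here is a minimal sketch that builds a Workflow manifest with spec.serviceAccountName set. The names `demo-`, `argo` and `argo-admiralty`, as well as the `busybox` container, are hypothetical placeholders and not taken from our deployment.

```python
import json


def build_workflow(name_prefix: str, namespace: str, service_account: str) -> dict:
    """Return a minimal Argo Workflow manifest that runs under `service_account`."""
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Workflow",
        "metadata": {"generateName": name_prefix, "namespace": namespace},
        "spec": {
            # Must match the serviceAccount created on both sides of the federation.
            "serviceAccountName": service_account,
            "entrypoint": "main",
            "templates": [
                {
                    "name": "main",
                    # Placeholder container, only here to make the manifest complete.
                    "container": {"image": "busybox", "command": ["echo", "hello"]},
                }
            ],
        },
    }


if __name__ == "__main__":
    # kubectl and the Argo CLI accept JSON manifests as well as YAML.
    print(json.dumps(build_workflow("demo-", "argo", "argo-admiralty"), indent=2))
```

When submitting through the CLI instead, the same effect should be obtainable with the `--serviceaccount` flag of `argo submit`.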

Roles

Given that the serviceAccount will be the same in both clusters, it must be bound to the appropriate role in order to execute both the Argo Workflow and Admiralty actions.

So far we have only seen the need to add the patch verb on pods for the apiGroup "" in argo-role.

Once the role has been patched, the serviceAccount that will be used must be added to the rolebinding argo-binding.
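The two changes above can be sketched as transformations on the Role and RoleBinding manifests, loaded as plain dictionaries. This is only an illustration: the helper names `add_pod_patch_verb` and `add_subject` are hypothetical, and the sketch assumes the manifests were exported (for example as JSON) and will be re-applied afterwards.

```python
import copy


def add_pod_patch_verb(role: dict) -> dict:
    """Return a copy of `role` that allows `patch` on pods in the core ("") apiGroup."""
    patched = copy.deepcopy(role)
    rules = patched.setdefault("rules", [])
    for rule in rules:
        # Only extend a rule that targets pods alone, so that `patch` is not
        # accidentally granted on other resources listed in the same rule.
        if rule.get("apiGroups") == [""] and rule.get("resources") == ["pods"]:
            verbs = rule.setdefault("verbs", [])
            if "patch" not in verbs:
                verbs.append("patch")
            return patched
    # No dedicated pods rule found: add one.
    rules.append({"apiGroups": [""], "resources": ["pods"], "verbs": ["patch"]})
    return patched


def add_subject(binding: dict, service_account: str, namespace: str) -> dict:
    """Return a copy of `binding` with the serviceAccount listed as a subject."""
    patched = copy.deepcopy(binding)
    subject = {"kind": "ServiceAccount", "name": service_account, "namespace": namespace}
    subjects = patched.setdefault("subjects", [])
    if subject not in subjects:
        subjects.append(subject)
    return patched
```

Both helpers are idempotent, so they can be run again on an already patched manifest without duplicating verbs or subjects.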

Token

In order to authenticate against the Kubernetes API, we need to provide the Admiralty Source with a token stored in a secret. This token is created on the Target for the serviceAccount that we will use in the Admiralty communication. We then copy the kubeconfig and replace the IP in it with the IP that the source will use to reach the k8s API. The token generated for the serviceAccount is added in the "user" part of the kubeconfig.
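The kubeconfig edit described above can be sketched as follows, working on the kubeconfig loaded as a dictionary. The function name `retarget_kubeconfig` is hypothetical, and the sketch assumes the new address is an IPv4 address or a hostname and that the original port must be kept.

```python
import copy
from urllib.parse import urlsplit, urlunsplit


def retarget_kubeconfig(kubeconfig: dict, api_host: str, token: str) -> dict:
    """Return a copy of `kubeconfig` pointing at `api_host` and authenticating with `token`."""
    edited = copy.deepcopy(kubeconfig)
    for cluster in edited.get("clusters", []):
        parts = urlsplit(cluster["cluster"]["server"])
        # Keep scheme and port, swap only the host part of the API server URL.
        port = f":{parts.port}" if parts.port else ""
        cluster["cluster"]["server"] = urlunsplit(
            (parts.scheme, f"{api_host}{port}", parts.path, parts.query, parts.fragment)
        )
    for user in edited.get("users", []):
        # Replace any existing credentials with the serviceAccount token.
        user["user"] = {"token": token}
    return edited
```

For example, a server entry `https://127.0.0.1:6443` retargeted to `10.0.0.5` becomes `https://10.0.0.5:6443`, and the cluster's certificate authority data is left untouched.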

This edited kubeconfig is then passed to the source cluster and converted into a secret, bound to the Admiralty Source resource. It is presented to the k8s API on the target cluster, first as part of the TLS handshake and then to authenticate the serviceAccount that performs the pod delegation.
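Converting the kubeconfig into a secret amounts to base64-encoding its text under a key of a Secret manifest. The sketch below assumes the key is named `config`, which should be checked against the Admiralty version in use; the function name and its arguments are hypothetical.

```python
import base64


def kubeconfig_secret(name: str, namespace: str, kubeconfig_text: str, key: str = "config") -> dict:
    """Return a Secret manifest holding the kubeconfig text under `key`."""
    # Secret `data` values must be base64-encoded strings.
    encoded = base64.b64encode(kubeconfig_text.encode("utf-8")).decode("ascii")
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "Opaque",
        "data": {key: encoded},
    }
```

The namespace must be the one shared by both clusters, since the secret is read alongside the Admiralty resources deployed there.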

Caveats

Token

By default, a token created by the Kubernetes API is only valid for 1 hour, which causes problems for:

- Workflows taking more than 1 hour to execute, with pods requesting creation on a remote cluster after the token has expired
- Retransferring the modified kubeconfig: we need a way to communicate this data securely between two clusters running Open Cloud.

It is possible to create a token with infinite duration (in reality 10 years), but the Admiralty documentation advises against this for security reasons.
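One way to anticipate expiry rather than discover it mid-workflow is to read the `exp` claim of the token, since serviceAccount tokens issued by the Kubernetes API are JWTs. The sketch below only decodes the payload and does not verify the signature; the 300-second margin and the function names are arbitrary choices for illustration.

```python
import base64
import json
import time
from typing import Optional


def token_expiry(token: str) -> int:
    """Return the `exp` claim (seconds since the epoch) of a JWT, without verifying it."""
    payload_b64 = token.split(".")[1]
    # JWT segments are base64url-encoded without padding; restore it before decoding.
    padded = payload_b64 + "=" * (-len(payload_b64) % 4)
    claims = json.loads(base64.urlsafe_b64decode(padded))
    return int(claims["exp"])


def seconds_left(token: str, now: Optional[float] = None) -> float:
    """Return how many seconds remain before the token expires."""
    current = time.time() if now is None else now
    return token_expiry(token) - current


def needs_refresh(token: str, margin_seconds: int = 300, now: Optional[float] = None) -> bool:
    """Return True when the token expires within `margin_seconds`."""
    return seconds_left(token, now) <= margin_seconds
```

A check like this could run before submitting a workflow, so that a new token is generated and transferred when the current one is about to expire.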

Resource names

When coupling Argo Workflows with a MinIO server to store the artifacts produced by a pod, we need to access, among other things, a secret containing the authentication data. If we launch a workflow on clusters A and B, the secret resource containing the authentication data cannot have the same name in cluster A and in cluster B.

At the moment, the only time we have faced this issue is with the MinIO S3 storage access. Since it is a service that we could deploy ourselves, we would be able to give its resources names containing a UUID linked to the OC instance.
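A minimal sketch of such a naming scheme is given below. The function name, the `minio-creds` base name used in the example and the way the instance UUID is obtained are all hypothetical; the only constraint taken from Kubernetes is that a Secret name must be a valid DNS subdomain name of at most 253 characters.

```python
import re
import uuid

# Lowercase alphanumerics, '-' and '.', starting and ending with an alphanumeric.
_DNS_SUBDOMAIN = re.compile(r"^[a-z0-9]([a-z0-9.-]*[a-z0-9])?$")


def instance_scoped_name(base: str, instance_id: uuid.UUID) -> str:
    """Return `base` suffixed with the instance UUID, as a valid Kubernetes object name."""
    if not _DNS_SUBDOMAIN.match(base):
        raise ValueError(f"invalid base name: {base!r}")
    # str(UUID) is lowercase hex with dashes, so it is already DNS-safe.
    name = f"{base}-{instance_id}"
    if len(name) > 253:
        raise ValueError(f"name too long ({len(name)} characters)")
    return name
```

With this scheme, `instance_scoped_name("minio-creds", instance_uuid)` yields a different secret name for each OC instance, so the secrets of cluster A and cluster B no longer collide.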